Who can you really trust? This is the critical question, and it applies to most areas of life, especially crypto, whose ethos is “Trust no one”.

Last week, a thought leader (and former CTO of Coinbase) pointed out that Apple and Google might represent a systemic risk to crypto.

In that very same week, Ledger’s users discovered that they need to trust the integrity of Ledger’s firmware updates.

In this blog we look at the topic of minimizing the trust-scape: Reducing the trust you put in other entities with your crypto and avoiding systemic risks. We will also highlight a counterintuitive realization: In most cases, eliminating the need to trust actually requires adding more parties to your solution, not reducing them.

Therefore, for crypto wallets, more parties usually means more security, and wallets with no single point of failure – especially MPC or MultiSig solutions – will be more secure by default than wallets reliant on trusting a singular entity, whether those wallets are software or hardware.

MPC wallets like ZenGo solve all types of systemic risks, including the aforementioned Google or Apple ‘backdoor risk’, by design.

At the crossroads of minimizing the trust-surface

The problem of putting trust in operators was not created by crypto wallets, but is as old as time.

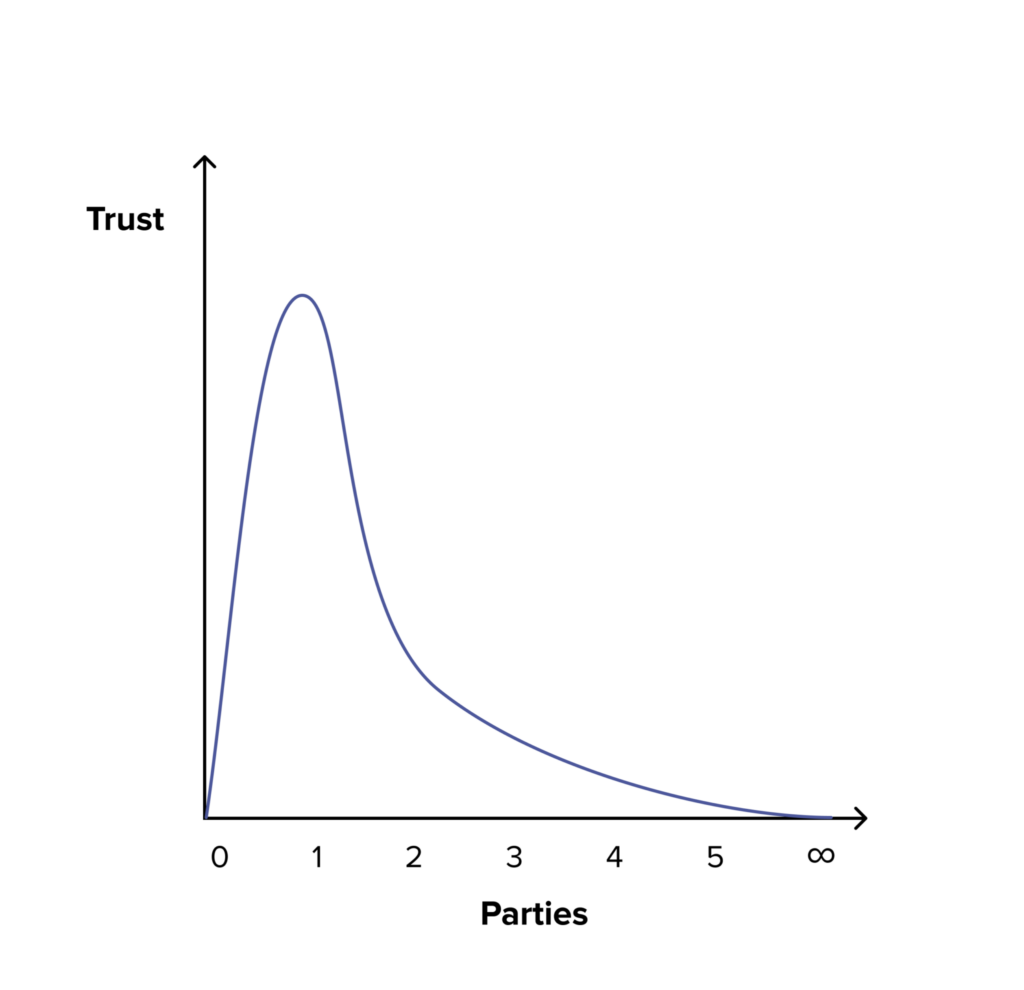

The amount of trust required, as a function of the number of controlling parties, is non-monotonic: you cannot generally say that “more is better” or “less is better”. Zero parties are better than a single party, but two parties are better than one as well, as shown in the sketch below.

Trust as a function of the cardinality of the required parties

As is illustrated in the sketch above, the best case for minimizing trust is when no party has control over a mechanism, as there is nothing to be trusted. In sharp contrast, the worst case is when just one party has full control: If this single party becomes rogue, nothing can stop it.

However, by adding more parties and distributing responsibility, the amount of required trust decays rapidly, since every independent party would now need to collude with all the others for an evil scheme to succeed (and the number of pairwise agreements this takes grows quadratically, as in the handshake problem).
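To make the arithmetic behind this intuition concrete, here is a minimal sketch. It assumes a deliberately simplified model that is not part of the original argument: each party turns rogue independently with the same probability `p_rogue`, and a scheme only succeeds if all parties collude. The function names are illustrative.

```python
from math import comb


def pairwise_links(n: int) -> int:
    """Number of distinct pairs among n parties (the handshake problem): n * (n - 1) / 2."""
    return comb(n, 2)


def all_collude_probability(p_rogue: float, n: int) -> float:
    """Chance that all n parties are rogue at once, assuming each party
    turns rogue independently with probability p_rogue (a simplification)."""
    return p_rogue ** n


# Compare a single operator with two to five independent parties.
for n in range(1, 6):
    print(n, pairwise_links(n), all_collude_probability(0.1, n))
```

Under these assumptions, every added party both increases the number of pairwise agreements a conspiracy needs and multiplies the chance of full collusion by another factor of `p_rogue`. Real parties are rarely fully independent, which is why independence matters as much as the head count.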

By default, most mechanisms are operated by a single party. As we have just seen, this is the worst number of operators. This has also been proven in practice many times in centralized systems. Therefore, when system designers want to minimize trust in a system, they need to make a choice between two opposite options: They can go in the path of reducing the number of parties from one to zero, or go in the very opposite direction and add more parties.

Reducing to Zero: The hardware wallet failure

Hardware wallet users went down the path of reducing controlling parties to zero to minimize the crypto “trust-surface” – a narrative also projected, whether intentionally or not, by hardware wallet companies. They believed that when they used such devices, their private key was “protected by hardware,” meaning that even if a vendor were to go rogue and update the hardware with malicious firmware, this malicious firmware would be physically incapable of accessing the private key. If this were true, it would have eliminated a very important piece of the trust-surface: the private key would be safe within immutable hardware, removing any need to trust any other party.

However, when Ledger introduced its recovery feature, which literally sends a version of the hardware wallet’s private key over the internet, it revealed that this belief was a misconception: a private key is not physically protected from a firmware update. In doing so, Ledger broke a steadfast narrative and violated the perceived qualities of hardware wallets.

Users learned that their costly “Hardware wallets”, including Ledger’s, were merely “Firmware wallets”, no safer from rogue updates than regular software wallets. This harsh revelation provoked a major uproar among hardware wallet users.

This kind of false belief in the immutability of a particular solution is not exclusive to this incident, but in fact represents a repeating theme in crypto. Users and system designers are often tempted by the “Zero-Price Effect” of eliminating a controlling party altogether and replacing it with some immutable, fail-free solution. Users falsely rely on the immutability of a critical part of the solution (hardware, smart contract, etc.) as a way to minimize the trust-surface, only to find out that in the real world these parts cannot really be immutable, as they require some maintenance. In fact, their users still need to trust some administrator not to go rogue and change these parts’ functionality. Therefore, in many cases, users who choose this path of “zero parties” in the hope of reaching the best-case scenario will find themselves back in the “single party” worst case.

Adding more parties: The Two-man rule

Conversely, users can choose to solve trust issues by adding more independent parties, thus limiting the trust or blind reliance on any individual party. This option is not new of course, as the need to minimize trust in operators of sensitive systems, like nuclear weapons and cryptographic materials, has always existed. The U.S. Air Force calls this the “two-man rule”.

According to Wikipedia, “The two-man rule is a control mechanism designed to achieve a high level of security for especially critical material or operations. Under this rule, access and actions require the presence of two or more authorized people at all times.”

In order to truly apply the two-man rule in crypto, we have to eliminate all “Single Points of Failure” (SPOFs). The main (but not the only) SPOFs that need to be eliminated are the private key and its backup: the notorious seed phrase.

Some systems today already do just that, such as multi-signature (MultiSig) solutions for Bitcoin, or smart-contract Account Abstraction (“AA”) based MultiSig solutions for Ethereum and EVM environments. In such solutions, multiple private keys are required to authorize a transaction, thus eliminating the SPOF of any single private key.
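The core rule of such schemes, that a transaction is valid only once enough distinct key holders have approved it, can be sketched in a few lines. This is a toy model under stated assumptions, not how Bitcoin or any AA contract implements it: HMAC from the Python standard library stands in for real public-key signatures (so the verifier here holds the keys, which a real chain never does), and `sign`, `is_authorized`, and the key names are hypothetical.

```python
import hashlib
import hmac


def sign(key: bytes, tx: bytes) -> bytes:
    """Stand-in for a real signature: an HMAC of the transaction under the signer's key."""
    return hmac.new(key, tx, hashlib.sha256).digest()


def is_authorized(tx: bytes, signatures: dict[str, bytes],
                  keys: dict[str, bytes], threshold: int) -> bool:
    """Return True if at least `threshold` distinct known signers produced a valid signature.

    `signatures` maps signer name -> signature, so each signer counts at most once.
    """
    valid = sum(
        1
        for signer, sig in signatures.items()
        if signer in keys and hmac.compare_digest(sig, sign(keys[signer], tx))
    )
    return valid >= threshold


# Hypothetical 2-of-3 setup.
keys = {"alice": b"key-a", "bob": b"key-b", "carol": b"key-c"}
tx = b"send 1 coin to address X"

one_signature = {"alice": sign(keys["alice"], tx)}
two_signatures = {**one_signature, "bob": sign(keys["bob"], tx)}

print(is_authorized(tx, one_signature, keys, threshold=2))   # False
print(is_authorized(tx, two_signatures, keys, threshold=2))  # True
```

A single compromised key cannot move funds in this model, which is the sense in which no individual private key remains a single point of failure.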

However, it should be noted that such systems still require further scrutiny to make sure that the different parties are as independent as possible in order to avoid systemic risks. For example:

- If all private keys of a MultiSig system are stored on the same vendor’s firmware solution (e.g. Ledger), then they remain as exposed to the aforementioned rogue recovery update attacks as they were before.

- If all private keys of a MultiSig system are stored on the same vendor’s platform solution (Google or Apple), then they are still vulnerable to government-induced backdoors as suggested in the tweet above.

- If all private keys of a MultiSig system are kept in the same physical place, then they are probably as exposed to physical threats (fire, theft, collapse) as before.
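The checks in the list above can be sketched as a small audit over a key inventory. This is an illustrative sketch only: the `KeyHolder` fields, the vendor and location labels, and the `shared_dependencies` helper are hypothetical names introduced here, and a real review would consider more dimensions than these three.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class KeyHolder:
    name: str
    vendor: str    # who ships the firmware or wallet software
    platform: str  # operating system or cloud platform underneath
    location: str  # where the key physically lives


def shared_dependencies(holders: list[KeyHolder], threshold: int) -> list[tuple[str, str]]:
    """List every (attribute, value) that alone covers at least `threshold` keys.

    Any such value is a systemic risk: compromising that one vendor,
    platform, or location is enough to reach the signing threshold.
    """
    risks = []
    for attribute in ("vendor", "platform", "location"):
        counts = Counter(getattr(holder, attribute) for holder in holders)
        risks += [(attribute, value) for value, count in counts.items() if count >= threshold]
    return risks


# Hypothetical 2-of-3 MultiSig where two keys share a vendor and two share a platform.
holders = [
    KeyHolder("key-1", vendor="VendorA", platform="PlatformX", location="home"),
    KeyHolder("key-2", vendor="VendorA", platform="PlatformY", location="office"),
    KeyHolder("key-3", vendor="VendorB", platform="PlatformY", location="bank vault"),
]
print(shared_dependencies(holders, threshold=2))
```

An empty result means that no single vendor, platform, or location can reach the threshold on its own, at least along the dimensions modeled here.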

Having said that, multi-party architecture shows a clear path for solving such potential systemic risks: If you are concerned about a certain party’s integrity, such as the aforementioned fear of Google or Apple, the way to mitigate this risk is not to remove that party from your solution altogether, but rather to ensure that responsibility for any critical function is not centralized in it and is instead divided among other parties as well.

MPC wallets follow the Two-man rule – by design

MPC wallets like our ZenGo wallet follow the same “Two-man rule” methodology and aim to eliminate SPOFs within the product. MPC creates a MultiSig-like solution at the mathematical level and ensures that the different shares (or shards) that represent the key are distributively created and stored by different parties: They never reside in the same place, and instead are generated in and by separate, orthogonal systems (Details in our MPC white paper).

ZenGo’s solution consists of two MPC parties: The user’s device and the remote ZenGo server. This significantly reduces systemic risk, as the two parties differ in:

- Different hardware and software architecture: Mobile devices and cloud servers

- Device ownership: Users and ZenGo

- Physical location: User pockets and a remote cloud datacenter

The SPOF of the seed phrase backup is eliminated too and replaced by a simple and secure 3-factor recovery process, in which each authentication element has redundancy (more here).

In contrast to the aforementioned MultiSig solutions, MPC solutions are not limited to a specific blockchain but are multi-chain by design, and ZenGo itself supports multiple blockchains and hundreds of different assets.

To illustrate the effectiveness of the ZenGo MPC model, let’s consider the aforementioned threat of mobile platforms (Google and Apple) becoming rogue and leaking the user’s shard of the secret. Even if they did so, it would still not give away the private key, as the other half of the system (the share held on the remote ZenGo server) is out of the reach of these platforms. A successful attack would require further collusion, greatly reducing any platform’s motivation to become rogue in the first place.
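Why a single leaked share gives nothing away can be illustrated with toy additive secret sharing. This sketch is an assumption-laden simplification and not ZenGo’s actual protocol: a real MPC wallet generates the shares jointly and signs without ever recombining them, whereas here a key is split and recombined purely to show the arithmetic. `MODULUS`, `split`, and `combine` are hypothetical names.

```python
import secrets

# Toy modulus for illustration; real schemes work modulo the curve's group order.
MODULUS = 2**256


def split(secret: int) -> tuple[int, int]:
    """Split a secret into two additive shares: device_share + server_share = secret (mod MODULUS)."""
    device_share = secrets.randbelow(MODULUS)  # uniformly random, independent of the secret
    server_share = (secret - device_share) % MODULUS
    return device_share, server_share


def combine(device_share: int, server_share: int) -> int:
    """Recombine the two shares (done here only to check the arithmetic)."""
    return (device_share + server_share) % MODULUS


private_key = secrets.randbelow(MODULUS)
device_share, server_share = split(private_key)
assert combine(device_share, server_share) == private_key

# A leaked device share is consistent with EVERY possible key: for any candidate,
# some server share exists that would complete it, so the leak alone rules nothing out.
for candidate in (0, 1, 123456789):
    matching_server_share = (candidate - device_share) % MODULUS
    assert combine(device_share, matching_server_share) == candidate
```

In this model, a platform that exfiltrates the device share learns only a random number. It would still need the server’s share, which lives on different hardware under different ownership.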

Conclusions

Winston Churchill is credited with saying “Never let a good crisis go to waste.” We should use this recent failure of Firmware Wallets as an opportunity to rethink and update our security assumptions and shake off our old misconceptions.

Such rethinking should include our attitude towards minimizing trust. We should realize that sometimes more is indeed more: systems with more parties and redundancy that adhere to the battle-tested concept of the “two-man rule”, including MPC wallets, are actually more secure and trustworthy than the classic Single Point of Failure solutions that are popular today.

Reach out to us with comments or thoughts:

- Via [email protected]

- On Twitter: @ZenGo

Learn more about ZenGo X, our open-source MPC library, and our GitHub here.